51 Operating System Interview Questions and Detailed Answers

Do you know what allows you to interact with hardware and software seamlessly? The answer is Operating Systems (OS) - which have become the backbone of every modern computing environment. As a software engineer, a strong understanding of OS concepts is important. However, nailing an OS interview can be challenging, especially when faced with a barrage of technical questions.

To help you prepare, we've curated a list of the top 51 OS interview questions along with detailed answers.

We hope these questions help you walk into your interview with confidence.

Question 1: What is an operating system?

An operating system (OS) is system software that manages computer hardware and software resources and provides common services for computer programs. The OS acts as an interface between the user and the computer hardware, enabling efficient execution of applications. Common tasks performed by an OS include process management, memory management, file system management, and handling input/output operations. Examples of operating systems include Windows, Linux, macOS, and Android.

Question 2: What are the main functions of an operating system?

The main functions of an operating system are:

Process Management: Manages the execution of processes, including scheduling, creation, termination, and resource allocation.

Memory Management: Allocates and deallocates memory space as needed by programs.

File System Management: Handles the storage, retrieval, organization, and security of files on storage devices.

Device Management: Manages hardware devices through device drivers and facilitates communication between devices and applications.

Security and Access Control: Protects system resources and user data by enforcing authentication, authorization, and access controls.

Error Detection and Handling: Monitors the system for errors and takes corrective action to keep it running smoothly.

User Interface: Provides a user-friendly interface, such as a command-line or graphical user interface (GUI), to interact with the system.

Resource Management: Efficiently manages system resources like CPU, memory, and I/O devices to optimize performance.

Question 3: Explain the difference between kernel and user mode.

| Aspect | Kernel Mode | User Mode |
| --- | --- | --- |
| Access Level | Full access to all system resources, including hardware and memory. | Restricted access to hardware and system resources. |
| Purpose | Executes critical tasks like hardware management and privileged instructions. | Runs application programs and non-critical processes. |
| Error Impact | Errors can crash the entire system. | Errors typically affect only the specific running process. |
| Hardware Access | Direct access to hardware is allowed. | Access to hardware is indirect and must go through the operating system. |
| System Usage | Used by the operating system kernel and device drivers. | Used by application programs and some system processes. |
| Security | Operates at a high privilege level with no restrictions. | Operates at a low privilege level to ensure stability and security. |

Question 4: What is a system call?

A system call is a mechanism that permits a program running in user mode to request services or resources from the operating system kernel. It acts as an interface between user applications and the operating system, enabling programs to perform low-level operations like file manipulation, process management, or communication with hardware.
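
As a rough illustration, Python's `os` module exposes thin wrappers around several system calls. The sketch below writes a file and reads it back using only those low-level wrappers; the helper name `write_then_read` and the file name `demo.txt` are made up for this example.

```python
import os
import tempfile

def write_then_read(path: str, payload: bytes) -> bytes:
    """Write payload to path and read it back using low-level os calls."""
    # os.open, os.write, os.read, and os.close each wrap the corresponding
    # system call, so every call below crosses from user mode into the kernel.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        os.write(fd, payload)
    finally:
        os.close(fd)

    fd = os.open(path, os.O_RDONLY)
    try:
        return os.read(fd, len(payload))
    finally:
        os.close(fd)

with tempfile.TemporaryDirectory() as tmp:
    # "demo.txt" is a hypothetical file name used only for this example.
    result = write_then_read(os.path.join(tmp, "demo.txt"), b"hello kernel")

print(result)
```

Higher-level calls such as Python's built-in `open()` end up issuing the same kinds of system calls on the program's behalf.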

Question 5: What are the types of operating systems?

The main types of operating systems are:

Batch Operating Systems: Execute jobs in batches without user interaction; commonly used in early computers.

Example: IBM Mainframe OS.

Time-Sharing Operating Systems: Multiple users can access a system simultaneously by sharing CPU time.

Example: UNIX.

Distributed Operating Systems: Manage a group of networked computers so that they work as a single system.

Example: Amoeba, Plan 9.

Real-Time Operating Systems (RTOS): Designed for systems requiring strict timing constraints, often in embedded systems.

Example: FreeRTOS.

Network Operating Systems: Manage and support network resources and communication among devices.

Example: Microsoft Windows Server.

Mobile Operating Systems: Designed specifically for mobile devices, focusing on touch interfaces and energy efficiency.

Example: Android, iOS.

Embedded Operating Systems: Built for specific hardware systems with limited resources, commonly used in IoT devices.

Example: VxWorks.

Multiprocessor Operating Systems: Support multiple CPUs, providing parallelism and increased performance.

Example: Solaris.

Question 6: What is the difference between a monolithic kernel and a microkernel?

| Aspect | Monolithic Kernel | Microkernel |
| --- | --- | --- |
| Structure | All OS services (e.g., file system, memory management) run in kernel mode. | Core functionality runs in kernel mode; other services run in user mode. |
| Size | Large, as it includes many services within the kernel. | Small, containing only essential services (e.g., IPC, CPU scheduling). |
| Performance | Faster due to fewer context switches and system calls. | Slower due to more context switches between user and kernel modes. |
| Reliability | Less reliable; a bug in any service can crash the entire system. | More reliable; faults in services affect only that service, not the kernel. |
| Complexity | More complex to develop and maintain. | Simpler kernel but requires more effort to manage separate services. |
| Examples | Linux, traditional UNIX (Windows NT is usually classed as a hybrid kernel). | Minix, QNX, Mach. |

Question 7: What is the purpose of an interrupt?

The purpose of an interrupt is to signal the CPU to temporarily halt its current operations and execute a specific task or respond to an event. Key Roles of Interrupts:

Event Notification: Alerts the CPU about hardware or software events (e.g., keyboard input, I/O completion).

Resource Utilization: Prevents the CPU from wasting time by allowing it to perform other tasks while waiting for events.

Priority Handling: Ensures critical tasks are handled first by assigning priorities to interrupts.

Real-Time Response: Enables timely responses to external events or hardware signals.

System Efficiency: Facilitates multitasking by coordinating the CPU’s attention across multiple processes and devices.

Question 8: Explain the concept of a daemon process.

A daemon process is a background process that runs continuously without direct user interaction. It performs system-related tasks such as managing hardware, services, or periodic jobs. Daemons are typically started during system startup and often run with elevated privileges. They operate independently of terminal sessions, and their parent process is usually the init process or its equivalent.

Question 9: What are the differences between 32-bit and 64-bit operating systems?

| Feature | 32-bit Operating System | 64-bit Operating System |
| --- | --- | --- |
| Architecture | Uses 32-bit processor architecture | Uses 64-bit processor architecture |
| Memory Addressing | Supports up to 4 GB of RAM (2^32 addresses) | Supports large amounts of RAM (up to 16 exabytes theoretically) |
| Performance | Handles less data per clock cycle | Handles more data, better for memory-intensive tasks |
| Application Support | Runs only 32-bit applications | Runs both 32-bit and 64-bit applications |
| Security | Limited advanced security features | Enhanced security, including hardware-enforced DEP and more effective ASLR thanks to the larger address space |

Question 10: What is the role of a shell in an OS?

A shell acts as an interface between the user and the operating system's kernel. It interprets user commands, executes them, and displays the results. The shell can operate in two modes: interactive, where it processes commands entered by the user, and scripting, where it runs a sequence of commands from a file. It facilitates file manipulation, program execution, and system management tasks. Common types of shells include command-line interfaces (e.g., Bash) and graphical shells.

Question 11: What is a process?

A process is an instance of a program in execution. It consists of the program code, its current activity, and allocated resources like memory, registers, and file handles. A process operates in a separate address space and goes through various states (e.g., ready, running, waiting) during its lifecycle. The operating system manages processes by scheduling them, allocating resources to them, and facilitating communication between them.

Question 12: Explain the difference between a process and a thread.

| Aspect | Process | Thread |
| --- | --- | --- |
| Definition | An independent instance of a program in execution. | A lightweight sub-task within a process. |
| Memory | Has its own separate memory space. | Shares memory and resources with other threads in the same process. |
| Overhead | Higher overhead for creation and management. | Lower overhead, faster to create and manage. |
| Communication | Requires inter-process communication (IPC) mechanisms. | Can communicate directly by accessing shared memory. |
| Crash Impact | Failure of one process does not affect others. | Failure of a thread can affect the entire process. |
| Execution | Can run independently. | Runs as part of the parent process. |

Question 13: What is context switching?

Context switching is the process of saving the state of a currently running process or thread and restoring the state of another process or thread to resume its execution.

During a context switch, the operating system saves the current process's CPU register values, program counter, and other state information, then loads the saved state of the next process or thread to be executed. Although necessary for multitasking, context switching incurs overhead and can impact system performance.

Question 14: Define process states and explain them.

A process goes through several states during its lifecycle, managed by the operating system. The process states are:

New: The process is being created but has not yet started execution.

Ready: The process is prepared to run and waiting for CPU allocation.

Running: The process is actively executing on the CPU.

Waiting (or Blocked): The process is waiting for an external event (e.g., I/O completion) before it can proceed.

Terminated: The process has completed execution or has been explicitly stopped, and its resources are released.
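
The five states above can be sketched as a small state machine. This is a simplified model for illustration only; the names `State`, `TRANSITIONS`, and `move` are assumptions of the sketch, and real kernels track additional states.

```python
from enum import Enum

class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"

# Allowed transitions in the classic five-state model.
TRANSITIONS = {
    State.NEW: {State.READY},
    State.READY: {State.RUNNING},
    State.RUNNING: {State.READY, State.WAITING, State.TERMINATED},
    State.WAITING: {State.READY},
    State.TERMINATED: set(),
}

def move(current: State, target: State) -> State:
    """Return target if the transition is legal, otherwise raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition: {current.name} -> {target.name}")
    return target

# Walk one process through a typical lifecycle, including one I/O wait.
state = State.NEW
for nxt in (State.READY, State.RUNNING, State.WAITING,
            State.READY, State.RUNNING, State.TERMINATED):
    state = move(state, nxt)

print(state.name)
```

Note that a waiting process cannot jump straight back to running: it first returns to the ready queue and waits to be scheduled again.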

Question 15: What is a process control block (PCB)?

A Process Control Block (PCB) is a data structure that stores essential information about a process, including its ID, state, CPU registers, program counter, memory management details, and I/O status. It is used by the operating system to manage processes and facilitate context switching.
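
To make the link between the PCB and context switching concrete, here is a toy sketch. The `PCB` and `CPU` classes and the `context_switch` function are hypothetical and keep only a few of the fields a real kernel stores.

```python
from dataclasses import dataclass, field

@dataclass
class PCB:
    pid: int
    state: str = "ready"
    program_counter: int = 0
    registers: dict = field(default_factory=dict)

@dataclass
class CPU:
    program_counter: int = 0
    registers: dict = field(default_factory=dict)

def context_switch(cpu: CPU, current: PCB, nxt: PCB) -> None:
    # Save the outgoing process's CPU state into its PCB.
    current.program_counter = cpu.program_counter
    current.registers = dict(cpu.registers)
    current.state = "ready"
    # Restore the incoming process's saved state onto the CPU.
    cpu.program_counter = nxt.program_counter
    cpu.registers = dict(nxt.registers)
    nxt.state = "running"

cpu = CPU(program_counter=120, registers={"r0": 7})
p1 = PCB(pid=1, state="running")
p2 = PCB(pid=2, program_counter=40, registers={"r0": 99})

context_switch(cpu, p1, p2)
print(p1.program_counter, cpu.program_counter, cpu.registers)
```

After the switch, `p1` remembers where it stopped (program counter 120) and the CPU continues from where `p2` last left off.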

Question 16: What is multithreading?

Multithreading is a technique in which a process is divided into smaller units called threads, which can run concurrently. Each thread is a sequence of executable instructions that operates within the same process, sharing resources such as memory, files, and data.

In a multithreaded application, multiple threads can execute independently but share the process's resources. It improves performance and responsiveness, especially on multicore processors, where threads can be distributed across different cores for parallel execution.
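
A minimal sketch of threads sharing one process's memory, using Python's standard `threading` module. The names `totals` and `add_many` are illustrative. In standard CPython builds the global interpreter lock limits parallel execution of Python bytecode, so this example demonstrates shared state and synchronization rather than a speedup.

```python
import threading

totals = {"count": 0}      # shared by every thread in the process
lock = threading.Lock()

def add_many(n: int) -> None:
    for _ in range(n):
        # The lock makes the read-modify-write on the shared value atomic.
        with lock:
            totals["count"] += 1

threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(totals["count"])
```

All four threads update the same dictionary without any explicit message passing, which is exactly what makes the lock necessary.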

Question 17: Explain the difference between user-level and kernel-level threads.

| Aspect | User-Level Threads | Kernel-Level Threads |
| --- | --- | --- |
| Management | Managed by user-level libraries in user space. | Managed directly by the operating system kernel. |
| Switching Overhead | Context switching is faster as it does not involve the kernel. | Context switching is slower as it requires kernel intervention. |
| OS Support | OS is unaware of user-level threads. | Fully supported by the OS. |
| Concurrency | Multiple threads in a process share a single kernel thread. | Each thread has its own kernel thread, enabling true parallelism on multi-core systems. |
| Portability | Portable across different operating systems. | Less portable due to OS-specific implementation. |
| Blocking | If one user-level thread blocks, the entire process may block. | If one kernel-level thread blocks, others can continue execution. |
| Performance | Better performance in thread creation and management. | Slower performance due to kernel involvement. |

Question 18: What is a deadlock?

A deadlock is a situation in an operating system where a set of processes becomes permanently blocked because each process is waiting for a resource that another process in the set is holding. As a result, none of the processes can proceed, and the system cannot make progress. Deadlocks typically occur when multiple processes hold resources and simultaneously request additional resources, creating a cycle of dependency.

Question 19: What are the necessary conditions for a deadlock to occur?

The necessary conditions for a deadlock to occur are:

Mutual Exclusion: At least one resource must be held in a non-shareable mode, meaning only one process can use the resource at a time.

Hold and Wait: A process holding at least one resource is waiting to acquire additional resources that are currently held by other processes.

No Preemption: Resources cannot be forcibly taken from a process; they must be released voluntarily by the holding process.

Circular Wait: A set of processes forms a circular chain where each process is waiting for a resource held by the next process in the chain.

These four conditions must occur simultaneously for a deadlock to take place.
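
The circular wait condition can be checked mechanically. The sketch below assumes a simplified "wait-for" graph in which each process maps to the processes it is waiting on, and uses depth-first search to look for a cycle. The function name `has_cycle` and the sample graphs are illustrative; with single-instance resources, a cycle in this graph indicates a deadlock.

```python
def has_cycle(wait_for: dict) -> bool:
    """Return True if the wait-for graph contains a cycle."""
    WHITE, GRAY, BLACK = 0, 1, 2   # unvisited, on the current path, finished
    color = {}

    def visit(node) -> bool:
        color[node] = GRAY
        for nxt in wait_for.get(node, ()):
            c = color.get(nxt, WHITE)
            if c == GRAY:
                return True        # reached a node already on the current path
            if c == WHITE and visit(nxt):
                return True
        color[node] = BLACK
        return False

    return any(color.get(n, WHITE) == WHITE and visit(n) for n in wait_for)

# P1 waits for P2, P2 waits for P3, P3 waits for P1: a circular wait.
deadlocked = {"P1": ["P2"], "P2": ["P3"], "P3": ["P1"]}
# Same chain, but P3 is not waiting on anyone, so it can finish.
safe = {"P1": ["P2"], "P2": ["P3"], "P3": []}

print(has_cycle(deadlocked), has_cycle(safe))
```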

Question 20: How can deadlocks be prevented?

Deadlocks can be prevented by ensuring that at least one of the necessary conditions for deadlock (mutual exclusion, hold and wait, no preemption, circular wait) does not hold. Strategies include:

Eliminate Mutual Exclusion: Design resources to be shareable wherever possible, so multiple processes can access them simultaneously.

Avoid Hold and Wait: Require processes to request all needed resources at once and only proceed when all are available, or require processes to release all held resources before requesting new ones.

Allow Preemption: Permit the system to forcibly take resources from a process if needed to avoid or break a deadlock.

Avoid Circular Wait: Impose a fixed ordering on resource acquisition. Processes request resources in a predefined sequence to prevent circular dependencies.

These methods proactively address deadlock risks but may impact system efficiency or resource utilization.
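
As an illustration of the fixed-ordering strategy, the sketch below makes every thread acquire locks in one global order, no matter which order the caller names them in. Sorting by `id()` is just one convenient way to get a consistent order within a single run; the helper names are made up for this example.

```python
import threading

lock_a = threading.Lock()
lock_b = threading.Lock()

def acquire_in_order(*locks):
    """Acquire locks in one global order (here: by id()) and return that order."""
    ordered = sorted(locks, key=id)
    for lk in ordered:
        lk.acquire()
    return ordered

def release_all(ordered):
    for lk in reversed(ordered):
        lk.release()

completed = []

def worker(name, first, second):
    # Callers may name the locks in any order; acquisition order is fixed.
    held = acquire_in_order(first, second)
    try:
        completed.append(name)
    finally:
        release_all(held)

# The two threads request the locks in opposite orders, which could
# deadlock if each thread simply acquired them as given.
t1 = threading.Thread(target=worker, args=("t1", lock_a, lock_b))
t2 = threading.Thread(target=worker, args=("t2", lock_b, lock_a))
t1.start()
t2.start()
t1.join()
t2.join()

print(sorted(completed))
```

Because both threads always take the same lock first, a cycle of waiting threads cannot form.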

Question 21: What is virtual memory?

Virtual memory is a memory management technique used by operating systems to simulate a larger amount of memory than is physically available in RAM. It works by mapping virtual addresses used by processes to physical memory or, when needed, to disk storage. So, the system can run larger applications or multiple processes simultaneously by storing inactive portions of memory on the disk and loading them into RAM as required.

Question 22: Explain paging and segmentation.

Paging is a memory management technique in which a process's logical memory is divided into fixed-size blocks called pages, and physical memory is divided into blocks of the same size called frames. Pages are mapped to available frames using a page table, which maintains the mapping information. This approach eliminates external fragmentation because any available frame can store any page, regardless of its location in memory. However, paging may cause internal fragmentation if a page (typically the last page of a process) is not fully utilized. Paging is hardware-supported and transparent to the program, meaning processes do not need to manage memory allocation directly.

Segmentation divides memory into variable-sized blocks called segments, which correspond to logical divisions of a program, such as functions, arrays, or data structures. A logical address consists of a segment number and an offset within that segment. The operating system maintains a segment table that stores the base address and limit (length) of each segment. Segmentation aligns with the logical structure of programs, making it easier to manage related data and code. However, it can lead to external fragmentation because segments vary in size, and fitting them into memory becomes challenging as free spaces become scattered.
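
Both translation schemes can be sketched in a few lines. The 4 KB page size, the table contents, and the function names below are assumptions chosen for the example, not values from any particular system.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

def translate_paged(vaddr: int, page_table: dict) -> int:
    """Split a virtual address into (page, offset) and map the page to a frame."""
    page, offset = divmod(vaddr, PAGE_SIZE)
    if page not in page_table:
        raise LookupError(f"page fault: page {page} is not mapped")
    return page_table[page] * PAGE_SIZE + offset

def translate_segmented(segment: int, offset: int, segment_table: dict) -> int:
    """Check the offset against the segment limit, then add the base address."""
    base, limit = segment_table[segment]
    if offset >= limit:
        raise ValueError(f"offset {offset} is outside segment limit {limit}")
    return base + offset

page_table = {0: 5, 1: 2}                         # page number -> frame number
segment_table = {0: (1000, 400), 1: (6000, 100)}  # segment -> (base, limit)

print(translate_paged(4100, page_table))          # page 1, offset 4
print(translate_segmented(1, 20, segment_table))  # base 6000 + offset 20
```

With these tables, virtual address 4100 lands in frame 2 at offset 4 (physical address 8196), and offset 20 in segment 1 maps to physical address 6020.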

Question 23: What is a page fault?

A page fault occurs when a program tries to access a memory page that is not currently available in RAM. The hardware raises a trap (a type of exception) to the kernel, and the operating system steps in to handle the situation.

First, the OS checks whether the memory reference is valid. If it is valid, the required page is fetched from secondary storage (like a hard drive or SSD) and loaded into RAM. The operating system then updates the page table to reflect the new location of the page in memory.

Finally, the program resumes execution with the requested page now available. If the memory reference is invalid, the OS generates an error, such as a segmentation fault.

Question 24: What is the difference between internal and external fragmentation?

| Aspect | Internal Fragmentation | External Fragmentation |
| --- | --- | --- |
| Definition | Wasted memory within an allocated block due to fixed-sized memory allocation. | Wasted memory caused by scattered free spaces that cannot be used for large allocations. |
| Cause | Occurs when the allocated block size exceeds the actual memory requested by a process. | Occurs when free memory is fragmented into non-contiguous blocks. |
| Example | A process requests 5 KB but is allocated 10 KB, leaving 5 KB unused within the block. | Multiple small free blocks exist, but a process requiring 10 KB cannot be allocated. |
| Occurs In | Fixed-sized memory allocation systems. | Variable-sized memory allocation systems. |
| Solution | Use dynamic memory allocation or reduce block size. | Use memory compaction or paging techniques. |

Question 25: How is memory allocation handled in an OS?

Memory allocation in an operating system is managed through static and dynamic methods. In static memory allocation, memory is assigned at compile time. The size and location of the memory are fixed and cannot be modified during execution. It is typically used for program instructions and global variables.

In dynamic memory allocation, memory is assigned at runtime as processes request it. The operating system allocates memory as needed and reclaims it when no longer in use. It is used for managing heap and stack memory.

The OS uses contiguous allocation for simplicity, where a single continuous block of memory is provided to a process. However, this can lead to external fragmentation. To address this, non-contiguous allocation techniques, such as paging and segmentation, divide memory into smaller blocks and reduce fragmentation. Additionally, virtual memory enables processes to use more memory than physically available by utilizing secondary storage.

To efficiently manage memory, the OS relies on a memory management unit (MMU) to translate virtual addresses into physical addresses and uses data structures like free lists, bitmaps, and page tables to track memory usage.

Question 26: What is demand paging?

Demand paging is a memory management technique in which a page is loaded into memory only when a process attempts to access it for the first time. Instead of preloading all pages of a program into memory, the operating system loads pages as needed, saving memory and improving efficiency.

When a program tries to access a page that is not in memory, a page fault occurs. The operating system then retrieves the required page from secondary storage (e.g., a hard drive or SSD) and loads it into memory. Once the page is available, the process resumes execution without needing to know that the page was initially absent.
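
A small simulation can make this behaviour tangible. The sketch below loads pages only on first access and counts the resulting page faults. It assumes a FIFO replacement policy once all frames are full, which is only one of several possible policies; the reference string and the function name are illustrative.

```python
from collections import deque

def count_page_faults(references, frame_count):
    """Count page faults under demand paging with FIFO replacement."""
    resident = set()       # pages currently in memory
    load_order = deque()   # oldest loaded page sits at the left
    faults = 0
    for page in references:
        if page in resident:
            continue       # page already in memory: no fault
        faults += 1        # page must be brought in from secondary storage
        if len(resident) == frame_count:
            victim = load_order.popleft()
            resident.remove(victim)
        resident.add(page)
        load_order.append(page)
    return faults

refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(count_page_faults(refs, 3), count_page_faults(refs, 4))
```

For this reference string the simulation reports 9 faults with 3 frames and 10 faults with 4 frames, a reminder that under FIFO more memory does not always mean fewer faults.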

Question 27: What is the purpose of the translation lookaside buffer (TLB)?

The purpose of the Translation Lookaside Buffer (TLB) is to improve the efficiency of virtual-to-physical address translation in a system using virtual memory. It is a cache that stores recently used page table entries. The processor can quickly retrieve the physical address of a page without repeatedly accessing the page table in the main memory. It reduces memory access time and enhances system performance.
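
The caching idea can be modelled in software. The toy `TLB` class below is a hypothetical sketch that keeps a fixed number of page-to-frame entries and evicts the least recently used one; real TLBs are hardware structures whose replacement policies vary.

```python
from collections import OrderedDict

class TLB:
    """A tiny LRU cache of page -> frame translations."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    def lookup(self, page: int, page_table: dict) -> int:
        if page in self.entries:
            self.hits += 1
            self.entries.move_to_end(page)    # mark as most recently used
            return self.entries[page]
        self.misses += 1
        frame = page_table[page]              # slow path: consult the page table
        self.entries[page] = frame
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used
        return frame

page_table = {p: p + 100 for p in range(8)}   # illustrative mapping
tlb = TLB(capacity=2)
for page in [0, 1, 0, 2, 0, 1]:
    tlb.lookup(page, page_table)

print(tlb.hits, tlb.misses)
```

With a capacity of two entries, this access pattern produces 2 hits and 4 misses; a larger TLB or a more local access pattern raises the hit rate.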

Question 28: What are the differences between stack memory and heap memory?

| Aspect | Stack Memory | Heap Memory |
| --- | --- | --- |
| Storage | Stores local variables, function calls, and control flow data. | Stores dynamically allocated memory. |
| Allocation | Automatically managed in LIFO order. | Manually managed using malloc()/free() or new/delete. |
| Lifetime | Limited to the scope of the function or block. | Persists until explicitly deallocated. |
| Speed | Faster due to automatic and sequential management. | Slower due to manual management and potential fragmentation. |
| Size | Limited and predefined by the system. | Larger, can grow dynamically, limited by available memory. |
| Error Risk | Prone to stack overflow if the size is exceeded. | Prone to memory leaks and fragmentation if not managed properly. |

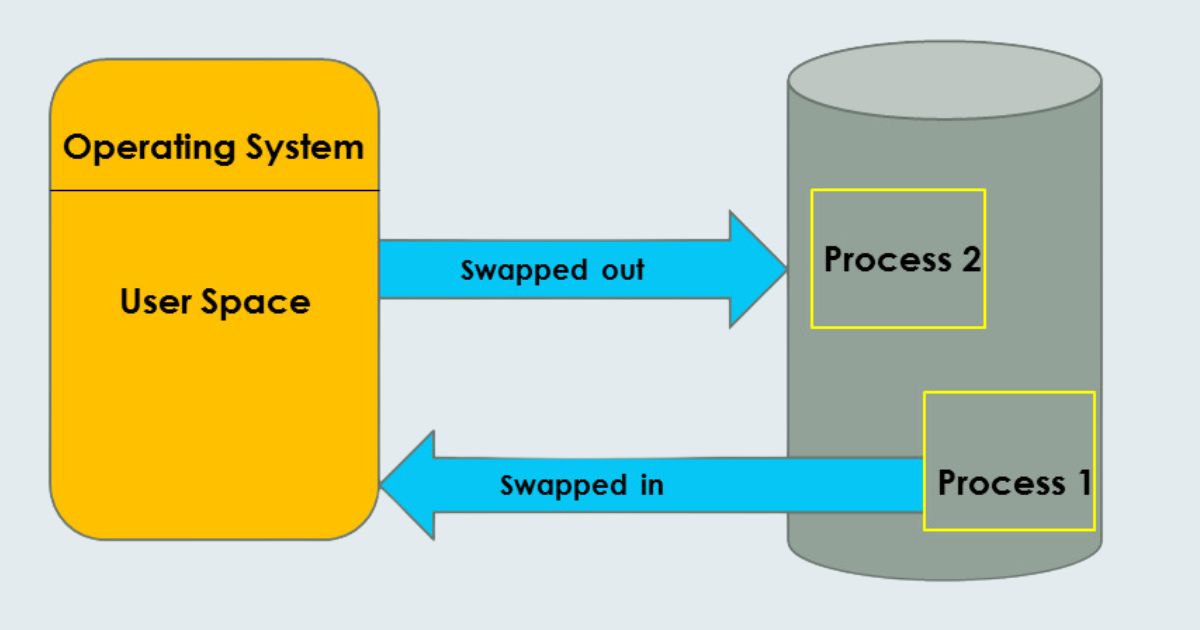

Question 29: What is swapping in operating systems?

Swapping is the process of moving a process or part of a process from the main memory (RAM) to a secondary storage (usually a disk) and vice versa. This mechanism frees up memory for other processes or tasks when the system runs low on RAM. Swapping supports multitasking and managing memory efficiently, but it can introduce performance overhead due to the slower access speed of secondary storage compared to RAM.

Question 30: Explain the concept of memory-mapped files.

Memory-mapped files allow the contents of a file to be mapped directly into the virtual address space of a process. The process can then access and manipulate the file using standard memory operations, such as reads and writes, without issuing an explicit I/O system call for each access. The operating system handles the synchronization between the memory-mapped region and the underlying file, so changes to the memory are reflected in the file and vice versa.
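
Python's standard `mmap` module offers a convenient way to try this out. In the sketch below, the file name `mapped.bin` is a made-up example; the file is modified through a slice assignment on the mapping rather than through a `write()` call.

```python
import mmap
import os
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "mapped.bin")  # hypothetical file name
    with open(path, "wb") as f:
        f.write(b"hello world")

    with open(path, "r+b") as f:
        # Length 0 maps the whole file; the file must not be empty.
        with mmap.mmap(f.fileno(), 0) as mm:
            mm[0:5] = b"HELLO"   # a plain slice assignment, no write() call
            mm.flush()           # ask the OS to push changes to the file

    with open(path, "rb") as f:
        contents = f.read()

print(contents)
```

Reading the file back through ordinary file I/O shows the change made through memory: the first five bytes are now upper case.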

Question 31: What is a file system?

A file system manages, stores, organizes, and retrieves data on storage devices like hard drives, SSDs, or external drives. It defines how files are named, stored, and accessed, as well as how storage space is allocated and managed. Examples include FAT32, NTFS, ext4, and APFS.

Question 32: Explain the difference between NTFS, FAT32, and ext4 file systems.

| Feature | NTFS | FAT32 | ext4 |
| --- | --- | --- | --- |
| Full Form | New Technology File System | File Allocation Table 32 | Fourth Extended File System |
| Maximum File Size | 16 EB (practical: 16 TB) | 4 GB | 16 TB |
| Maximum Volume Size | 256 TB | 8 TB | 1 EB |
| Compatibility | Supported on Windows, limited on Linux and macOS. | Supported on most OS but outdated. | Native to Linux, limited on Windows. |
| Features | Advanced, supports compression, encryption, and journaling. | Simple, lacks advanced features. | Journaling, better performance, large file and volume support. |
| Performance | Efficient with modern drives and large files. | Slower for large files or drives. | Optimized for Linux systems with high performance. |
| Use Case | Modern Windows systems and large storage needs. | Legacy systems or portable drives for cross-platform use. | Linux systems for performance and reliability. |

Question 33: What is inode in file systems?

An inode in a file system is a data structure that stores metadata about a file or directory. It contains information such as file size, permissions, ownership, timestamps, and pointers to data blocks where the file's content is stored. However, an inode does not store the file name or the actual data; file names are stored in directory entries that reference inodes.

Question 34: How does the OS manage file permissions?

The OS manages file permissions using a combination of access control mechanisms that define which users or processes can read, write, or execute a file. These permissions are typically organized into categories, such as owner, group, and others, with each category having distinct permissions.

The OS relies on metadata stored in the file system to enforce these permissions. When a user or process attempts to access a file, the OS checks the permissions against the request and either grants or denies access accordingly.
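
On POSIX systems, the owner/group/others model can be explored with Python's `os` and `stat` modules. The sketch below assumes a Unix-like system and uses a made-up file name; it sets a file to read/write for the owner, read-only for the group, and no access for others.

```python
import os
import stat
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "report.txt")  # hypothetical file name
    with open(path, "w") as f:
        f.write("data")

    # rw- for the owner, r-- for the group, --- for others (octal 640).
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR | stat.S_IRGRP)

    file_mode = os.stat(path).st_mode
    mode = stat.S_IMODE(file_mode)       # just the permission bits
    symbolic = stat.filemode(file_mode)  # the form shown by `ls -l`

print(oct(mode), symbolic)
```

The permission bits live in the file's metadata, which is what the OS consults on every access attempt.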

Question 35: What is journaling in a file system?

Journaling is a file system feature that protects data integrity by keeping a log (or journal) of changes before they are committed to the main file system. This log records metadata and, optionally, file data changes. If a system crash or power failure occurs, the file system can use the journal to recover and restore consistency by replaying or rolling back incomplete operations. It reduces the risk of data corruption and speeds up recovery compared to non-journaling file systems.

Question 36: What is the difference between a hard link and a soft link?

A hard link is an additional directory entry that points to the same inode as an existing file, effectively creating another name for the same data. Deleting the original name does not affect the hard link, as the data remains accessible until all hard links are removed.

A soft link (or symbolic link) is a pointer to the file's pathname, not the file itself. Deleting the original file breaks the soft link, rendering it useless since it depends on the file's location.

Hard links cannot cross file system boundaries, while soft links can.
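
The difference is easy to observe on a Unix-like system. The sketch below uses made-up file names, creates both kinds of link, then deletes the original name. Creating symbolic links may require extra privileges on some platforms, so treat this as a POSIX-oriented example.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    original = os.path.join(tmp, "original.txt")
    hard = os.path.join(tmp, "hard.txt")
    soft = os.path.join(tmp, "soft.txt")
    with open(original, "w") as f:
        f.write("payload")

    os.link(original, hard)      # second name for the same inode
    os.symlink(original, soft)   # separate file that stores the target path

    same_inode = os.stat(original).st_ino == os.stat(hard).st_ino
    link_count = os.stat(original).st_nlink

    os.remove(original)          # remove the original name only
    with open(hard) as f:
        hard_contents = f.read() # data is still reachable via the hard link
    # The symlink itself still exists, but its target path no longer does.
    soft_is_broken = os.path.islink(soft) and not os.path.exists(soft)

print(same_inode, link_count, hard_contents, soft_is_broken)
```

Both names share one inode with a link count of 2, the hard link keeps the data alive after the original name is removed, and the symbolic link is left dangling.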

Question 37: How does the OS handle file locking?

The OS handles file locking to prevent conflicts and keep data consistent when multiple processes access a file simultaneously. There are two main types of file locking:

Shared Lock (Read Lock): Allows multiple processes to read a file concurrently but prevents any of them from writing to it.

Exclusive Lock (Write Lock): Restricts both reading and writing by other processes, ensuring exclusive access for the locking process.
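
On Unix-like systems, both lock types can be tried with `fcntl.flock` from Python's standard library. These are advisory locks, meaning they only affect programs that also ask for the lock. The sketch opens the same (made-up) file twice to stand in for two independent processes.

```python
import fcntl
import os
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "shared.dat")  # hypothetical file name
    with open(path, "w") as first, open(path, "r") as second:
        # Two holders can own a shared lock at the same time.
        fcntl.flock(first, fcntl.LOCK_SH)
        fcntl.flock(second, fcntl.LOCK_SH | fcntl.LOCK_NB)
        shared_ok = True
        fcntl.flock(second, fcntl.LOCK_UN)
        fcntl.flock(first, fcntl.LOCK_UN)

        # Once one handle holds an exclusive lock...
        fcntl.flock(first, fcntl.LOCK_EX)
        try:
            # ...a non-blocking request on the other handle is refused.
            fcntl.flock(second, fcntl.LOCK_SH | fcntl.LOCK_NB)
            exclusive_blocks = False
        except BlockingIOError:
            exclusive_blocks = True
        fcntl.flock(first, fcntl.LOCK_UN)

print(shared_ok, exclusive_blocks)
```

Without the `LOCK_NB` flag, the second request would simply wait until the exclusive lock is released.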

Question 38: Explain the concept of disk scheduling.

Disk scheduling is the process of determining the order in which disk I/O requests are serviced. Its purpose is to optimize disk access time by reducing seek time, rotational latency, and overall response time for data retrieval. The OS prioritizes requests based on specific algorithms to efficiently manage the movement of the disk's read/write head.

Question 39: What is the difference between logical and physical file systems?

| Aspect | Logical File System | Physical File System |
| --- | --- | --- |
| Function | Manages file naming, permissions, and operations. | Handles the storage and retrieval of data blocks. |
| Abstraction Level | High-level, user-facing abstraction. | Low-level, hardware-facing implementation. |
| Key Responsibilities | File organization, access control, and metadata. | Block allocation, device driver interaction. |
| Focus | User and application file operations. | Physical layout and data management on storage. |

Question 40: What is the purpose of a directory structure?

The purpose of a directory structure is to organize and manage files on a storage system efficiently. It provides a hierarchical framework for locating, accessing, and managing files systematically. A directory structure also supports grouping related files, managing permissions, searching, and navigation.

Question 41: What is an I/O subsystem?

An I/O subsystem is responsible for managing all input and output operations. It acts as an intermediary between the hardware devices (such as keyboards, disks, printers, and network devices) and the operating system.

The I/O subsystem makes data transfer between the hardware and the rest of the system efficient and reliable. It uses mechanisms like device drivers, buffering, caching, and I/O scheduling. It also handles device-specific communication protocols, manages interrupts, and deals with errors during data transmission.

Question 42: Explain the difference between blocking and non-blocking I/O.

| Aspect | Blocking I/O | Non-blocking I/O |
| --- | --- | --- |
| Behavior | Process waits until the I/O operation completes. | Process continues execution without waiting. |
| Control | Control is returned after I/O completion. | Control is returned immediately after the call. |
| Concurrency | The calling thread performs no other work during I/O. | The calling thread can perform other tasks during I/O. |
| Use Case | Simple operations where waiting is acceptable. | High-performance systems requiring multitasking. |
| Implementation | Easier to implement, but less efficient. | More complex to implement, but highly efficient. |
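
The difference in behaviour can be seen with a pipe on a Unix-like system. In this sketch, the read end of the pipe is switched to non-blocking mode with `os.set_blocking`, so a read on an empty pipe returns control immediately by raising `BlockingIOError` instead of waiting.

```python
import os

read_fd, write_fd = os.pipe()
os.set_blocking(read_fd, False)   # switch the read end to non-blocking mode

try:
    os.read(read_fd, 1024)        # nothing has been written yet
    returned_immediately = False
except BlockingIOError:
    # A blocking read would have waited here; non-blocking returns at once.
    returned_immediately = True

os.write(write_fd, b"ready")
data = os.read(read_fd, 1024)     # data is available now, so this succeeds

os.close(read_fd)
os.close(write_fd)
print(returned_immediately, data)
```

In a real program, the caller would do other work after the failed read and try again later, often with the help of a readiness API such as `select`.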

Question 43: What is the role of device drivers in an OS?

Device drivers are specialized software modules in an operating system that facilitate communication between the OS and hardware devices. The roles of device drivers are:

Hardware Abstraction: Offer a standard interface to the OS, allowing it to interact with hardware without needing to know the device's specific details.

Command Translation: Convert OS requests into device-specific operations.

Device Control: Control the operation of hardware, such as starting or stopping devices.

Error Handling: Detect and report hardware errors to the OS.

Interrupt Handling: Respond to hardware-generated interrupts for tasks like data transfer completion.

Question 44: What is DMA (Direct Memory Access)?

DMA (Direct Memory Access) is a hardware feature that lets devices transfer data directly to or from the system's memory without the CPU copying each piece of data.

Because DMA offloads data transfer from the CPU, the CPU can focus on other operations while the DMA controller handles the transfer; the controller typically signals completion with an interrupt. DMA is commonly used in scenarios like disk I/O, network data transfer, and audio processing.

Question 45: How does the OS manage device queues?

The OS manages device queues by maintaining a queue for each device to handle I/O requests in an orderly manner. Key steps are:

- Step 1 - Queue Maintenance: Requests are added to the device queue as they arrive.

- Step 2 - Scheduling: The OS uses scheduling algorithms (e.g., FCFS, SSTF) to decide the order in which requests are processed.

- Step 3 - Concurrency Control: Ensures that simultaneous access to the queue is managed properly.

- Step 4 - Error Handling: Removes failed or invalid requests from the queue.

- Step 5 - Prioritization: Some OSes allow prioritizing certain requests to optimize performance.

Question 46: Explain the concept of spooling.

In Spooling (Simultaneous Peripheral Operations On-Line), data is temporarily stored in a buffer or spool to manage and coordinate input/output operations.

This lets slower devices, like printers, process data at their own pace while the system continues executing other tasks. Spooling is commonly used for managing print jobs, where multiple jobs are queued in a spool before being sent to the printer.

Question 47: What are I/O scheduling algorithms?

I/O scheduling algorithms determine the order in which input/output requests are processed by the operating system to optimize performance. Common I/O scheduling algorithms include:

First-Come, First-Served (FCFS): Processes requests in the order they arrive.

Shortest Seek Time First (SSTF): Selects the request closest to the current head position.

SCAN (Elevator): Moves the disk head in one direction, servicing requests, then reverses.

C-SCAN (Circular SCAN): Similar to SCAN but resets to the beginning after reaching the end.

LOOK and C-LOOK: Variants of SCAN and C-SCAN, stopping at the last request instead of the disk's end.
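
To compare these algorithms, the sketch below computes total head movement (in tracks) for FCFS, SSTF, and LOOK on a sample request queue. The queue, the starting head position of 53, and the choice to sweep toward higher tracks first in LOOK are assumptions made for the example.

```python
def fcfs(head, requests):
    """Total head movement when requests are served in arrival order."""
    total = 0
    for track in requests:
        total += abs(track - head)
        head = track
    return total

def sstf(head, requests):
    """Total head movement when the nearest pending request is served next."""
    pending = list(requests)
    total = 0
    while pending:
        nearest = min(pending, key=lambda t: abs(t - head))
        total += abs(nearest - head)
        head = nearest
        pending.remove(nearest)
    return total

def look(head, requests):
    """LOOK, sweeping toward higher tracks first, then reversing."""
    upper = sorted(t for t in requests if t >= head)
    lower = sorted((t for t in requests if t < head), reverse=True)
    return fcfs(head, upper + lower)

queue = [98, 183, 37, 122, 14, 124, 65, 67]
print(fcfs(53, queue), sstf(53, queue), look(53, queue))
```

For this queue the totals are 640 tracks for FCFS, 236 for SSTF, and 299 for LOOK, which shows how much reordering can reduce seek distance.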

Question 48: What is the purpose of buffering in I/O?

The purpose of buffering in I/O is to temporarily store data during input or output operations, smoothing communication between devices with different speeds or processing capacities. It bridges the gap between fast components, like the CPU and memory, and slower devices, such as disks or network interfaces. As a result, the system continues operating efficiently without waiting for slower devices to complete their tasks.

Buffering also helps in handling bursty or inconsistent workloads by accumulating data during high-activity periods and processing it gradually. Additionally, buffering supports asynchronous processing, where the CPU can continue executing tasks without being blocked by I/O operations.

Question 49: What is the difference between multiprocessing and multitasking?

| Aspect | Multiprocessing | Multitasking |
| --- | --- | --- |
| Definition | Utilizes two or more processors (CPUs) to execute tasks simultaneously. | Allows multiple tasks (processes) to run concurrently on a single CPU. |
| Parallelism | Achieves true parallelism as multiple CPUs execute tasks simultaneously. | Simulates parallelism by time-slicing and switching tasks on a single CPU. |
| Efficiency | Increases computational power and throughput. | Improves CPU utilization through efficient task management. |
| Hardware Dependency | Requires multiple CPUs or cores. | Can operate on a single CPU. |
| Usage | Ideal for high-performance systems like servers and computational tasks. | Suitable for general-purpose systems handling multiple applications at once. |

Question 50: What are synchronous and asynchronous I/O operations?

Synchronous I/O requires a process to wait for the I/O operation to complete before it can continue execution. Blocking in this way simplifies programming and keeps data handling consistent, but it can be inefficient if the device is slow.

In asynchronous I/O, the process continues executing without waiting for the I/O operation to finish. The OS notifies the process or handles the I/O completion in the background, improving system performance and multitasking capabilities, though it requires more complex programming to manage.
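
Python's `asyncio` module gives a feel for the asynchronous style at the application level. In this sketch, `asyncio.sleep` stands in for a slow device, and the `fake_io` helper and its device names are invented for the example. Both operations are started before either one finishes.

```python
import asyncio

events = []

async def fake_io(name: str, seconds: float) -> str:
    events.append(f"{name} started")
    # asyncio.sleep stands in for a slow device; it yields control while waiting.
    await asyncio.sleep(seconds)
    events.append(f"{name} finished")
    return name

async def main():
    # Start both operations and wait for them together.
    return await asyncio.gather(fake_io("disk", 0.02), fake_io("network", 0.01))

results = asyncio.run(main())
print(events)
print(results)
```

The event log shows the network operation starting before the disk operation has finished, while `gather` still returns the results in the order the operations were submitted.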

Question 51: What is the difference between distributed and network operating systems?

| Aspect | Distributed Operating System | Network Operating System |
| --- | --- | --- |
| Definition | Manages multiple interconnected computers as a single system. | Facilitates resource sharing and communication across systems. |
| Integration | Provides tight integration, making the system appear unified. | Maintains independence of each system in the network. |
| Transparency | Offers transparency in resource sharing and process management. | No transparency; users manage resources explicitly. |
| Communication | Seamless communication between nodes with shared processes. | Communication via standard networking protocols. |
| Resource Sharing | Automatic and coordinated resource sharing. | Resource sharing requires manual setup or explicit access. |
| Fault Tolerance | Includes fault tolerance mechanisms across the system. | Limited fault tolerance; relies on individual systems. |
| Use Case | Used in tightly coupled systems, like clusters or grids. | Used in loosely connected systems, like LANs or WANs. |

Conclusion

So, you are done with 51 Operating System Interview Questions and Detailed Answers. We hope this guide has been insightful and helps you succeed in your interview journey. Good luck!

Continuous learning and hands-on practice with real-world scenarios are key to staying ahead in this field. To achieve it, you can enroll in Skilltrans courses. We have a variety of useful courses that will support you in your future.

Meet Hoang Duyen, an experienced SEO Specialist with a proven track record in driving organic growth and boosting online visibility. She has honed her skills in keyword research, on-page optimization, and technical SEO. Her expertise lies in crafting data-driven strategies that not only improve search engine rankings but also deliver tangible results for businesses.