Top 52 Kubernetes Interview Questions and Detailed Answers

Kubernetes has revolutionized how applications are deployed, managed, and scaled across distributed environments. As more companies embrace Kubernetes, demand for professionals skilled in this technology has surged.

A strong career usually starts with a good interview. Skilltrans has listed and answered the most common interview questions for you. Study them in your own way so you can answer professionally and with confidence.

Here are 52 must-ask Kubernetes interview questions with detailed answers. Hope you are ready for it!

Question 1: What is Kubernetes, and why is it used?

Kubernetes is an open-source container orchestration platform. It automates the deployment, scaling, and management of containerized applications.

Originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes helps organizations run applications in distributed environments so that they scale easily, recover from failures, and stay highly available.

Kubernetes is used because it simplifies managing containerized applications by abstracting the underlying infrastructure and automating common operational tasks. Key reasons for using Kubernetes include:

Automated Scaling: Kubernetes can automatically scale applications up or down based on demand. Organizations can efficiently manage resources and respond to traffic spikes or lulls.

High Availability and Resilience: Kubernetes monitors application health and can automatically restart or replace failed containers, minimizing downtime.

Portability: As a platform-agnostic solution, Kubernetes can run across different cloud providers and on-premises infrastructure.

Efficient Resource Management: Kubernetes optimizes hardware usage, allocating resources as needed and reducing overhead costs.

Simplified Application Management: Through features like rolling updates, rollbacks, and revision history, Kubernetes enables teams to manage application updates with minimal disruption.

Question 2: Explain the architecture of Kubernetes.

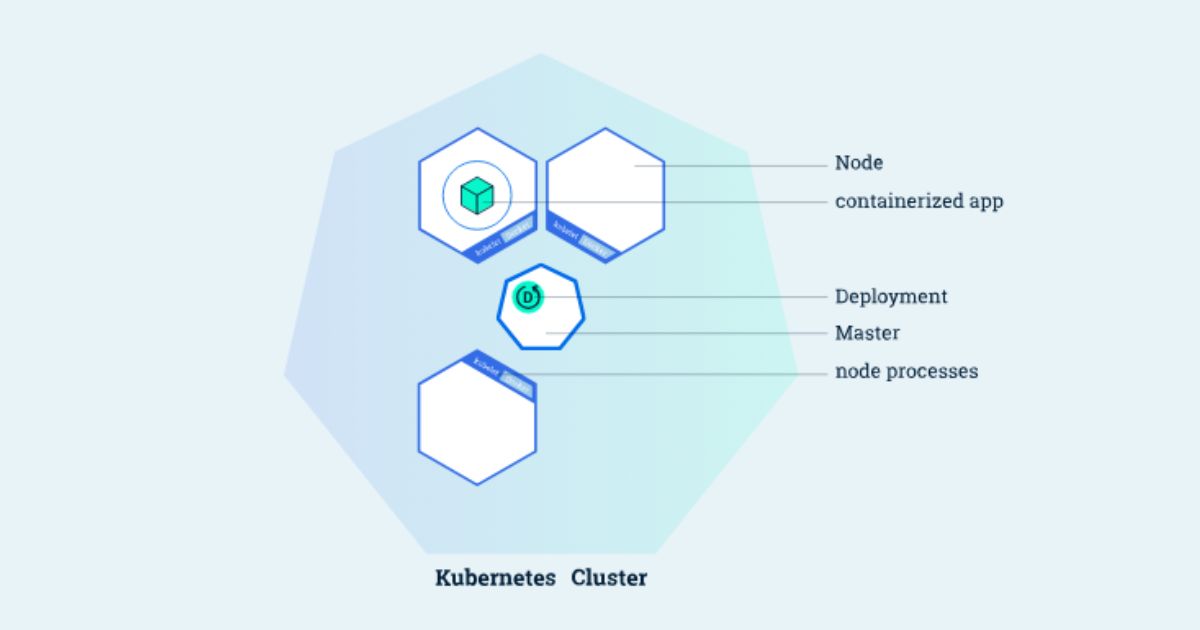

Kubernetes has a master-worker architecture composed of Control Plane components (Master Node) and Worker Nodes.

Control Plane (Master Node)

- kube-apiserver: Acts as the main entry point, exposing the Kubernetes API. It processes and validates requests, updating cluster state in etcd.

- etcd: A key-value store for cluster data, storing all configurations and state information.

- kube-scheduler: Assigns workloads (pods) to nodes based on resource availability and policies.

- kube-controller-manager: Manages various controllers, making sure the cluster meets desired states (e.g., replicating pods, maintaining node health).

- cloud-controller-manager (if using cloud): Integrates Kubernetes with cloud provider services (e.g., storage, load balancers).

Worker Nodes

- kubelet: Runs on each worker node, executing pod specs, monitoring health, and communicating with the Control Plane.

- kube-proxy: Maintains network rules on each node so that traffic addressed to a Service is routed and load-balanced to the right pods.

- Container Runtime: Executes containers on each node (e.g., containerd or CRI-O; since Kubernetes 1.24, Docker Engine needs the cri-dockerd adapter).

Question 3: Define a Kubernetes cluster.

A Kubernetes cluster is a set of nodes (physical or virtual machines) that run containerized applications managed by Kubernetes. It consists of a control plane and worker nodes. The control plane is responsible for maintaining the desired state of the cluster, such as which applications are running and their container images. The worker nodes run the actual applications and workloads.

Kubernetes automates tasks like deployment, scaling, and management of containerized applications. The benefits range from high availability to efficient resource utilization across the cluster.

Question 4: What are the Kubernetes cluster’s key components?

A Kubernetes cluster is a set of nodes that run containerized applications managed by Kubernetes. It has two main parts: the Control Plane and Worker Nodes.

Control Plane: Manages the cluster’s overall state, workload scheduling, and control. It includes:

- kube-apiserver: The entry point for all API interactions, handling requests, and managing cluster data.

- etcd: A distributed key-value store for storing cluster configuration and state.

- kube-scheduler: Assigns pods to nodes based on available resources.

- kube-controller-manager: Runs controllers (such as the Node and ReplicaSet controllers) that keep the cluster in its desired state.

- cloud-controller-manager: Integrates with cloud services for handling storage, networking, and node management (optional; only present when the cluster runs on a cloud provider).

Worker Nodes: Run containerized applications (pods) and provide the computing power. Each node includes:

- kubelet: Ensures containers are running as described in the pod specifications and communicates with the Control Plane.

- kube-proxy: Manages networking within the cluster, enabling communication between services.

- Container Runtime: Executes the containers (e.g., Docker, containerd).

Question 5: What are nodes in Kubernetes?

Nodes in Kubernetes are the worker machines (either physical or virtual) that run the containerized applications and workloads. Each node contains the services necessary to run pods, which are the smallest deployable units in Kubernetes. Nodes are managed by the control plane, which schedules tasks and manages the overall state of the cluster. A node typically runs a container runtime (such as Docker or containerd), kubelet (an agent that communicates with the control plane and manages the node), and kube-proxy (a network proxy that maintains network rules and facilitates communication).

Question 6: What are pods?

Pods are the smallest and most basic deployable units in Kubernetes. A pod encapsulates one or more containers that share the same network namespace, storage, and lifecycle. Containers within a pod can communicate with each other using localhost and share resources like storage volumes.

Question 7: How do pods work?

Pods are designed to run a single instance of a given application or service. They can host tightly coupled containers that need to work together, such as a main application container and a helper container. Kubernetes schedules and manages pods, so they are distributed across nodes in the cluster to maintain application reliability and scalability. Pods can be created, scaled, and managed using controllers such as Deployments, ReplicaSets, and StatefulSets to achieve desired state management.

Question 8: Describe namespaces in Kubernetes.

Namespaces in Kubernetes create isolated environments within a single Kubernetes cluster. They organize resources by dividing the cluster into separate, logical partitions. This is particularly useful for environments that need multi-tenancy, where different teams or projects share the same cluster but require separation in terms of access, resource allocation, and management.

With Namespaces, you can:

Manage resources such as pods, services, and deployments independently within each namespace.

Apply different policies and configurations to different parts of the cluster.

Control access using Role-Based Access Control (RBAC) to restrict or permit specific users or services to interact only with certain namespaces.

By default, Kubernetes comes with namespaces like default, kube-system, and kube-public, but users can create custom namespaces as needed for better organization and management.

Question 9: Explain the purpose of kube-apiserver.

The kube-apiserver is a core component of the Kubernetes control plane. The purposes of the kube-apiserver are:

Handling API Requests: It processes API calls from users, command-line tools (like kubectl), and other components within the control plane. These requests include deploying applications, scaling workloads, and managing resources.

Validation and Authentication: The kube-apiserver authenticates incoming requests and validates them before processing.

Communication Hub: It acts as a communication hub between internal components, including the etcd database (which stores the cluster state) and controllers that manage specific aspects of the cluster.

Cluster State Management: By interacting with etcd, it makes sure that any changes to the cluster configuration are recorded and maintained accurately.

Question 10: What is kube-scheduler?

The kube-scheduler is the component in Kubernetes that is responsible for assigning newly created, unscheduled pods to nodes in the cluster. It continuously monitors for pods that need scheduling and evaluates available nodes to find the optimal match based on factors like resource requirements (CPU, memory), constraints, and scheduling policies such as affinity, anti-affinity, and taints/tolerations. Once a suitable node is selected, the kube-scheduler binds the pod to that node, enabling the kubelet to manage its lifecycle on the chosen node.

Question 11: How does kube-scheduler function?

The kube-scheduler functions by continuously monitoring for newly created pods that do not have an assigned node. It evaluates each available node in the cluster to determine which one best meets the pod's scheduling requirements. The scheduler considers factors such as resource availability (CPU, memory), affinity and anti-affinity rules, taints and tolerations, and other constraints or policies defined by the user. Once the kube-scheduler identifies the optimal node, it binds the pod to that node, effectively assigning the pod for execution.
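
The filter-then-pick flow described above can be sketched as a toy model. This is only an illustration under stated assumptions, not the real kube-scheduler: the `Node` and `Pod` classes, the millicore and MiB fields, and the "most free CPU left" scoring rule are all hypothetical, and taints are reduced to plain strings.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int                      # free CPU in millicores
    free_mem_mi: int                     # free memory in MiB
    taints: set = field(default_factory=set)


@dataclass
class Pod:
    name: str
    cpu_m: int                           # requested CPU in millicores
    mem_mi: int                          # requested memory in MiB
    tolerations: set = field(default_factory=set)


def fits(pod: Pod, node: Node) -> bool:
    """Filter step: enough free resources and every taint is tolerated."""
    return (
        pod.cpu_m <= node.free_cpu_m
        and pod.mem_mi <= node.free_mem_mi
        and node.taints <= pod.tolerations
    )


def score(pod: Pod, node: Node) -> int:
    """Score step (hypothetical rule): prefer the node with the most CPU left over."""
    return node.free_cpu_m - pod.cpu_m


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Return the chosen node name, or None if no node is feasible."""
    feasible = [n for n in nodes if fits(pod, n)]
    if not feasible:
        return None
    return max(feasible, key=lambda n: score(pod, n)).name


nodes = [
    Node("node-a", free_cpu_m=500, free_mem_mi=1024),
    Node("node-b", free_cpu_m=2000, free_mem_mi=4096, taints={"gpu"}),
    Node("node-c", free_cpu_m=1500, free_mem_mi=2048),
]
print(schedule(Pod("web", cpu_m=1000, mem_mi=512), nodes))
```

In this sketch `node-a` is filtered out for lack of CPU and `node-b` for its untolerated taint, so the pod lands on `node-c`. A pod that no node can accept gets `None`, which loosely corresponds to a pod staying Pending.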

Question 12: What is the role of kube-controller-manager?

The kube-controller-manager runs controller processes that regulate the state of the cluster. Its main roles are:

Node Controller: Monitors the status of nodes and manages node failure detection.

Replication Controller: Ensures that the specified number of pod replicas is running.

Endpoints Controller: Maintains endpoint objects that associate services with pods.

Service Account and Token Controllers: Manages default accounts and tokens for access control.

Logically, each controller is a separate process, but they are all compiled into a single binary and run in a single process, which streamlines the management and synchronization of resources within the cluster.

Question 13: Describe etcd and its importance in Kubernetes.

etcd is a distributed key-value store. It acts as the primary data storage for the cluster. It stores configuration data, metadata, and the current state of the entire cluster. Kubernetes uses etcd to keep track of resource information, such as pods, nodes, ConfigMaps, and secrets.

The API server interacts with etcd to read and write the desired state and the actual state of resources in the cluster.

Question 14: What is a kubelet?

The kubelet is the agent that runs on each node. It continuously monitors whether the containers described in Pod specifications are running and healthy. The kubelet communicates with the API server to receive Pod specifications, check container states, and report back on the status of the node and its pods.

The kubelet uses container runtimes (e.g., Docker, containerd) to start or stop containers, monitor resource usage, and restart failed containers if needed, aligning with the desired state defined in the cluster.

Question 15: What does kubelet do?

A kubelet performs several tasks on a Kubernetes node:

Registers the Node: It registers the node with the Kubernetes API server so that the node becomes part of the cluster.

Pod Management: The kubelet continuously monitors the Pods assigned to the node and makes sure each container matches the Pod specification received from the API server.

Container Lifecycle Management: It starts, stops, and restarts containers using a container runtime (like Docker or containerd).

Health Checks and Monitoring: The kubelet performs liveness and readiness probes on containers, reporting back to the control plane to update the pod's status based on these health checks.

Resource Reporting: It tracks resource usage and reports node and pod status (e.g., CPU and memory usage) back to the API server.

Question 16: How does kubectl work?

kubectl is the command-line tool for interacting with a Kubernetes cluster. It communicates with the Kubernetes API server to perform various actions - creating, updating, and deleting resources. Here’s how it works:

API Requests: When you run a kubectl command, it sends HTTP requests to the API server, following REST principles. For instance, commands like kubectl get pods or kubectl apply -f <file>.yaml trigger specific API calls.

Configuration and Authentication: kubectl uses a configuration file, usually located at ~/.kube/config, to authenticate and specify which cluster to connect to. This config file includes details like server URLs, user credentials, and context settings.

Serialization and Deserialization: kubectl processes data in YAML or JSON formats, converting it to the necessary format for the API server, and then converts responses back to readable output for the user.

Directing to Appropriate Resources: Depending on the command, kubectl interacts with various Kubernetes resources, such as pods, nodes, deployments, and services, instructing the API server to make changes accordingly.

Question 17: What is kubectl used for?

With kubectl, users control Kubernetes resources by running commands. Its main functions include:

Resource Management: Create, update, delete, and view Kubernetes resources such as Pods, Services, Deployments, and ConfigMaps.

Cluster Information: Retrieve information about the cluster, nodes, and their status.

Troubleshooting: Inspect logs, debug pods, and check the status of resources to diagnose issues.

Scaling and Rolling Updates: Scale applications, manage deployments, and perform rolling updates.

Configuration Management: Apply configurations from YAML or JSON files to set up or update resources according to desired specifications.

Question 18: Define a ReplicaSet in Kubernetes.

A ReplicaSet is a resource that ensures a specified number of identical pod replicas are always running. It monitors the number of active pods that match its label selector and adjusts the count to match the desired state. If the number of pods falls below the specified replica count, the ReplicaSet creates new pods; if there are more than the desired number, it terminates excess pods.
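
The reconciliation idea can be sketched in a few lines. This is a simplified illustration, not the actual controller code: the pod dictionaries and the `reconcile` function are hypothetical, and deleting the first matching pods is an arbitrary choice made for this sketch.

```python
def reconcile(desired: int, pods: list, selector: dict) -> dict:
    """Compare matching pods with the desired count and decide what to do."""
    # A pod matches when it carries every label in the selector.
    matching = [p for p in pods if selector.items() <= p["labels"].items()]
    missing = desired - len(matching)
    if missing > 0:
        return {"create": missing, "delete": []}
    excess = len(matching) - desired
    # Arbitrary choice for this sketch: remove the first matching pods.
    return {"create": 0, "delete": [p["name"] for p in matching[:excess]]}


pods = [
    {"name": "web-1", "labels": {"app": "web"}},
    {"name": "web-2", "labels": {"app": "web"}},
    {"name": "db-1", "labels": {"app": "db"}},
]
print(reconcile(3, pods, {"app": "web"}))
print(reconcile(1, pods, {"app": "web"}))
```

Pods that do not match the selector (`db-1` here) are ignored entirely, which is why the label selector matters as much as the replica count.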

Question 19: What is a DaemonSet?

A DaemonSet ensures that a copy of a specific pod runs on every node (or a subset of nodes) in the cluster. It deploys a single instance of the specified pod on each node, which is useful for tasks that require a uniform deployment across nodes, such as log collection or node monitoring.

Question 20: Explain the concept of a StatefulSet.

A StatefulSet manages stateful applications that require persistent identity, ordered deployment, and stable storage. It provides each pod with a unique, stable identity and persistent storage, preserving continuity and consistency across restarts and rescheduling.

Question 21: How do you deploy an application in Kubernetes?

To deploy an application in Kubernetes, follow these steps:

Define Configuration: Create a YAML configuration file that specifies the application's desired state, typically using resources like Deployments, Services, and ConfigMaps. The Deployment resource is often used to manage the application's pod replicas and desired updates.

Create Resources: Use the kubectl apply command to deploy the configuration file to the cluster.

Expose the Application: Set up a Service to make the application accessible within or outside the cluster. For example, a LoadBalancer or NodePort Service exposes the application externally, while a ClusterIP Service exposes it only inside the cluster.

Verify Deployment: Use commands like kubectl get pods, kubectl get svc, and kubectl describe <resource> to check the status of the deployed resources and confirm that pods are running and accessible.

Monitor and Scale: Monitor the application’s performance using kubectl commands or a monitoring tool and scale as needed by updating the Deployment's replica count.
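
As a rough illustration of the "Define Configuration" step, the sketch below builds a Deployment and a Service manifest as Python dictionaries and prints them as JSON. The application name `web`, the image `nginx:1.27`, and the port are placeholder assumptions; the helper functions are hypothetical and exist only for this example.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Minimal apps/v1 Deployment: the selector must match the pod template labels."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def service_manifest(name: str, port: int, service_type: str = "ClusterIP") -> dict:
    """Minimal v1 Service that selects the pods created by the Deployment above."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": port}],
        },
    }


deployment = deployment_manifest("web", "nginx:1.27", replicas=3, port=80)
service = service_manifest("web", port=80)
print(json.dumps(deployment, indent=2))
print(json.dumps(service, indent=2))
```

Each printed document could be saved to its own file and passed to kubectl apply -f, since kubectl accepts JSON as well as YAML. In practice most teams write these manifests directly in YAML; generating them from code is just a convenient way to show the required fields.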

Question 22: Explain the role of a Deployment in Kubernetes.

A Deployment defines and maintains a desired state for pods and ReplicaSets. The roles of a Deployment are:

Defining Desired State: A Deployment specifies the desired number of pod replicas and the pod template.

Rolling Updates: Seamlessly update pods to a new version without downtime by gradually replacing old pods with new ones.

Rollback: Deployments support rollbacks, so you can return to a previous revision if an update fails or has issues.

Scaling: Deployments can scale the number of replicas up or down based on resource needs.

Question 23: What is the purpose of a Service in Kubernetes?

A Service is an abstraction that defines a logical set of Pods and a policy by which to access them. The primary purposes of a Service are:

Expose Pods: Services expose a set of Pods to other parts of the application or external users. They create a consistent, discoverable endpoint that remains the same even as the actual Pods behind it may change.

Load Balancing: Services can automatically load-balance network traffic across multiple Pods.

Service Discovery: Kubernetes provides built-in service discovery to let other components find and communicate with the Service.

Question 24: Describe Horizontal Pod Autoscaling (HPA).

Horizontal Pod Autoscaling (HPA) automatically adjusts the number of Pods in a deployment based on observed CPU utilization or other metrics. It increases or decreases the number of Pods to maintain performance and resource efficiency in response to changing workloads.
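
The scaling decision follows the formula given in the Kubernetes documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies it with a 10% tolerance band and min/max bounds; the function name and default bounds are assumptions for illustration, and the real autoscaler has additional behavior (stabilization windows, missing metrics) that is not modeled here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Apply the documented HPA ratio formula, then clamp to the allowed range."""
    ratio = current_metric / target_metric
    # Skip scaling when the ratio is close enough to 1.0.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))   # scale up
print(desired_replicas(4, current_metric=30, target_metric=60))   # scale down
print(desired_replicas(4, current_metric=63, target_metric=60))   # within tolerance
```

With 4 replicas at 90% CPU against a 60% target, the ratio is 1.5 and the result is 6 replicas; at 30% the ratio is 0.5 and the result is 2.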

Question 25: How can you perform a rolling update in Kubernetes?

You can perform a rolling update to update a deployment or daemon set without downtime. To perform a rolling update:

Use kubectl apply: Update the deployment YAML file with the new configuration and apply it.

Use kubectl set image: Specify the new container image for the deployment.

Kubernetes then replaces Pods incrementally, within the limits set by the Deployment's maxSurge and maxUnavailable settings.

Question 26: What is the difference between rolling updates and recreating deployments?

| Feature | Rolling Updates | Recreate Deployment |
| --- | --- | --- |
| Update Strategy | Gradually replaces old Pods with new ones in batches. | Terminates all existing Pods before creating new ones. |
| Downtime | Zero downtime; some old Pods remain running until new ones are ready. | Results in downtime; no Pods are available until new ones are created. |
| Use Case | For applications requiring continuous availability during updates. | For applications where downtime is acceptable or an immediate restart is needed. |
| Traffic Handling | Traffic is handled by both old and new Pods during the update. | Traffic is interrupted until new Pods are fully deployed. |
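
The difference in availability can be made concrete with a small simulation. This is a toy model under stated assumptions: the rolling update adds one new pod before removing one old pod (roughly maxSurge=1 and maxUnavailable=0), new pods become ready instantly, and both function names are hypothetical.

```python
def rolling_update(replicas: int) -> list:
    """Ready-pod count after each step: start one new pod, then stop one old pod."""
    old, new = replicas, 0
    ready = [old + new]
    while old > 0:
        new += 1                 # new pod starts and becomes ready
        ready.append(old + new)
        old -= 1                 # one old pod is terminated
        ready.append(old + new)
    return ready


def recreate(replicas: int) -> list:
    """Ready-pod count: all old pods stop first, then all new pods start."""
    return [replicas, 0, replicas]


print(rolling_update(3))
print(recreate(3))
```

In the rolling case the ready count never drops below the desired 3, while the recreate case passes through 0, which is the downtime window described in the table.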

Question 27: How do you scale applications in Kubernetes?

Applications can be scaled by adjusting the number of replicas for a deployment, replication controller, or stateful set. There are two main ways to scale applications:

Manual Scaling: Use kubectl scale to manually set the desired number of replicas.

Automatic Scaling: Use Horizontal Pod Autoscaler (HPA) to dynamically adjust the number of replicas based on resource usage (e.g., CPU or custom metrics).

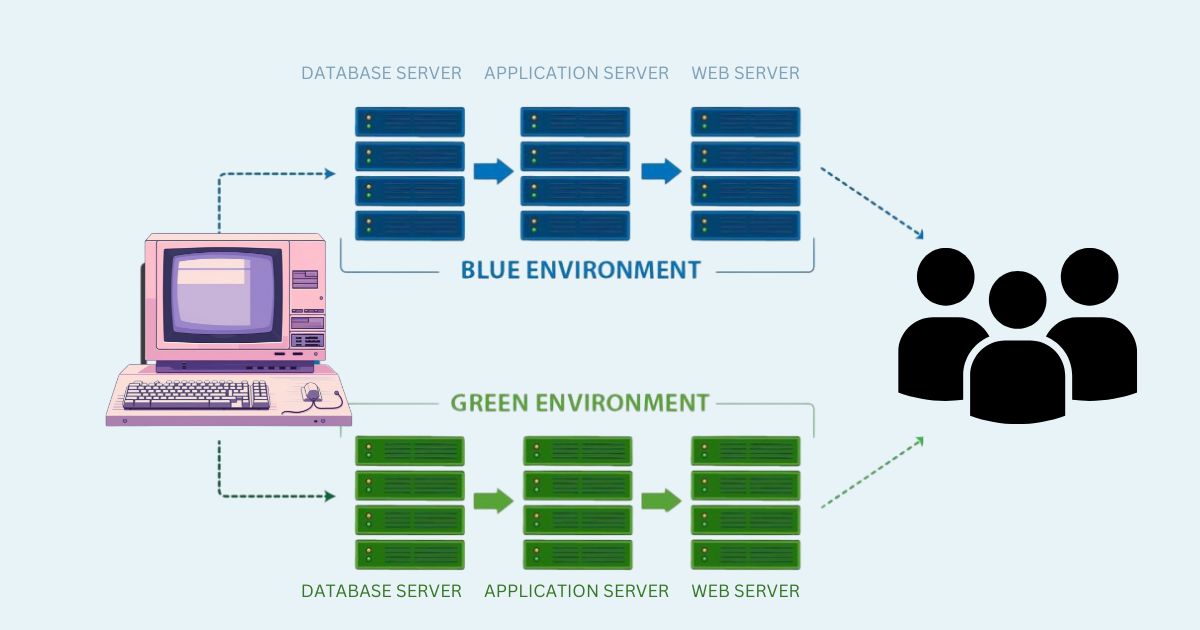

Question 28: What is a Blue-Green Deployment?

A Blue-Green Deployment is a deployment strategy that minimizes downtime and risk by running two identical environments, called "blue" and "green." The current (blue) environment serves production traffic, while the new (green) environment is updated and tested. Once verified, traffic is switched from the blue environment to the green environment, giving a smooth transition without disrupting users.

Question 30: Does Kubernetes support Blue-Green Deployment?

Kubernetes supports Blue-Green Deployments, although it doesn’t provide a native, built-in feature specifically for them. Instead, Blue-Green Deployments can be implemented using:

Multiple Deployments or Services: Create separate deployments for the blue and green environments, then switch the Service to point to the new version.

Traffic Shifting: Use an Ingress controller or a service mesh (e.g., Istio) to shift traffic between blue and green environments gradually.

Question 31: Explain Canary Deployments in Kubernetes.

A Canary Deployment in Kubernetes is a strategy that releases a new version of an application to a small subset of users before rolling it out to the entire user base.

Overview of Canary Deployments:

Incremental Rollout: Only a small percentage of traffic is initially directed to the new version. If the new version performs well, the traffic percentage is gradually increased until it fully replaces the old version.

Traffic Routing: This can be managed using services, Ingress controllers, or service meshes (like Istio) that provide fine-grained traffic routing to control how much traffic goes to each version.

Rollback Capability: If issues are detected during the canary phase, you can easily roll back to the previous stable version.

Question 32: How does Kubernetes handle scaling and load balancing?

Scaling in Kubernetes:

Horizontal Pod Autoscaler (HPA): Kubernetes can automatically scale the number of Pods up or down based on CPU, memory, or custom metrics like request count.

Manual Scaling: Kubernetes also supports manual scaling, where you can specify the number of replicas for a deployment.

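Cluster Autoscaler: For cluster-level scaling, Kubernetes can add or remove nodes based on workload demand.

Load Balancing in Kubernetes:

Services: A Service gives a set of Pods one stable endpoint and distributes traffic across the healthy Pods that match its label selector.

Ingress: An Ingress Controller routes and balances external HTTP and HTTPS traffic across Services.

LoadBalancer Services: On supported cloud providers, a LoadBalancer Service provisions an external load balancer in front of the Pods.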

Question 33: What are the different types of Services in Kubernetes?

Kubernetes has various Service types to control how Pods are accessed, whether internally within the cluster or externally by outside users.

ClusterIP

The ClusterIP Service type is the default option in Kubernetes, designed to expose services only within the cluster. This Service type allocates a stable internal IP address. Since it does not expose the Service outside of the cluster, ClusterIP is ideal for internal microservices, databases, and backend applications that only need to communicate with other internal components.

NodePort

The NodePort Service type exposes the Service on a specific port on each node’s IP. It is accessible from outside the cluster via <NodeIP>:<NodePort>. NodePort essentially opens a network port on each node and routes traffic to the specified Service. This type is useful for straightforward external access to the cluster, especially in testing and development, though it lacks advanced load-balancing features.

LoadBalancer

For production environments requiring stable and scalable external access, the LoadBalancer Service type is commonly used. Supported primarily by cloud providers, LoadBalancer automatically provisions an external load balancer that routes traffic to the Pods. This Service type creates a public IP and evenly distributes incoming requests, making it ideal for applications like web servers and public APIs.

ExternalName

An ExternalName Service maps a Service name to an external DNS name by returning a CNAME record. Pods can then refer to resources outside the Kubernetes cluster, like legacy systems or third-party APIs, through an in-cluster Service name. ExternalName simplifies integration with external services and can be used in scenarios where a Kubernetes Service needs to reference non-cluster resources.

Question 34: How does ClusterIP work in Kubernetes?

How ClusterIP Works:

Stable Internal IP: When a ClusterIP Service is created, Kubernetes assigns it a unique, stable internal IP address. This IP address is only accessible within the cluster; external requests cannot reach the Service directly.

Service Discovery: Kubernetes automatically registers the ClusterIP with DNS within the cluster. This registration lets other Pods locate the Service by name, so components can communicate without tracking specific Pod IP addresses, which can change.

Load Balancing: When a request is sent to the ClusterIP Service, Kubernetes performs internal load balancing, distributing the traffic across all healthy Pods that match the Service's label selector.

Internal-Only Access: Since ClusterIP is designed for internal access only, it is commonly used for backend services, microservices, or databases that only need to be accessed by other applications or services within the cluster.
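
The selector and load-balancing behavior can be sketched as follows. This is a simplified model, not how kube-proxy is implemented: the pod dictionaries and function names are hypothetical, and random choice stands in for whatever balancing the cluster's proxy mode actually applies.

```python
import random


def endpoints(selector: dict, pods: list) -> list:
    """IPs of ready pods whose labels contain every selector label."""
    return [
        p["ip"]
        for p in pods
        if p["ready"] and selector.items() <= p["labels"].items()
    ]


def pick_backend(selector: dict, pods: list, rng=random):
    """Choose one ready endpoint at random, or None if there is none."""
    ready = endpoints(selector, pods)
    return rng.choice(ready) if ready else None


pods = [
    {"ip": "10.0.0.11", "labels": {"app": "api"}, "ready": True},
    {"ip": "10.0.0.12", "labels": {"app": "api"}, "ready": False},
    {"ip": "10.0.0.13", "labels": {"app": "api", "tier": "backend"}, "ready": True},
    {"ip": "10.0.0.21", "labels": {"app": "db"}, "ready": True},
]
print(endpoints({"app": "api"}, pods))
print(pick_backend({"app": "api"}, pods))
```

Clients keep talking to the one stable Service address while the set of endpoints behind it changes as pods become ready, fail, or are replaced.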

Question 35: Explain NodePort.

The NodePort Service type exposes an application running on a cluster to external traffic by opening a specific port on each node’s IP address. Users can then reach the service at <NodeIP>:<NodePort>, so it is accessible from outside the cluster without the need for an external load balancer.

Question 36: Describe NodePort’s use case.

NodePort is commonly used in small-scale deployments, development, or testing environments, where direct external access is needed but advanced load balancing and failover features are not a priority.

NodePort is also useful as a foundational setup for external load balancing; for instance, cloud providers often use NodePort to configure LoadBalancer Services by routing traffic from a cloud-managed load balancer to the NodePort.

However, NodePort is of limited use for production-scale applications because it lacks advanced traffic management. It is best suited for testing environments, small clusters, or as a component in more complex networking setups.

Question 37: How does Ingress work in Kubernetes?

Ingress manages external HTTP and HTTPS access to services within a cluster. How Ingress Works:

Ingress Resource: An Ingress resource is an API object that defines routing rules, such as URL path-based or host-based routing, so you can direct traffic to different services within the cluster. For example, you can route traffic for example.com/api to one service and example.com/app to another.

Ingress Controller: For Ingress to work, you need an Ingress Controller running in the cluster, which acts as a reverse proxy. The Ingress Controller reads and implements the rules defined in the Ingress resource. Popular Ingress Controllers include NGINX, HAProxy, and Traefik. The controller dynamically configures routing to match the rules, balancing the traffic among the specified services.

SSL Termination: Ingress supports SSL termination, so you can secure external traffic using HTTPS. With SSL termination, the Ingress Controller handles decryption, reducing the load on individual services. This setup is ideal for web applications needing secure access.

Load Balancing and Path-Based Routing: Ingress enables advanced traffic routing and load balancing by routing requests based on paths (e.g., /api to one service, /app to another) or hostnames. This flexibility is valuable for multi-service applications or microservices needing different routes without exposing each service individually.
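
Host and path-based routing can be illustrated with a small matcher. It mimics prefix matching on whole path segments with the longest matching path winning; the rule table, the service names `api-svc` and `app-svc`, and the functions are hypothetical, and a real Ingress Controller handles far more (TLS, exact matches, wildcards).

```python
def path_matches(rule_path: str, request_path: str) -> bool:
    """Prefix match on whole segments: /api matches /api/v1 but not /apiary."""
    rule = [s for s in rule_path.split("/") if s]
    req = [s for s in request_path.split("/") if s]
    return req[:len(rule)] == rule


def route(rules: list, host: str, path: str):
    """Return the backend service for a request, or None if nothing matches."""
    candidates = [
        r for r in rules
        if r["host"] == host and path_matches(r["path"], path)
    ]
    if not candidates:
        return None
    # The longest matching path is the most specific rule.
    return max(candidates, key=lambda r: len(r["path"]))["service"]


rules = [
    {"host": "example.com", "path": "/api", "service": "api-svc"},
    {"host": "example.com", "path": "/app", "service": "app-svc"},
]
print(route(rules, "example.com", "/api/users"))
print(route(rules, "example.com", "/app"))
print(route(rules, "example.com", "/apiary"))
```

Requests under /api go to one backend and requests under /app to another, all through a single entry point, while an unmatched path such as /apiary returns `None` in this sketch.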

Question 38: What is an Ingress Controller?

Ingress Controller is a Kubernetes component that controls external access to services within a cluster, typically over HTTP and HTTPS. It implements the rules defined by Ingress resources, which specify routing configurations such as host-based or path-based rules for directing incoming traffic to specific services.

Question 39: Why is Ingress Controller important?

The Ingress Controller is important because it enables efficient, centralized management of external traffic. With it, you can:

Route requests to multiple services from a single IP address.

Implement SSL/TLS termination for secure connections.

Define custom routing, load balancing, and authentication for incoming traffic.

Question 40: Describe the purpose of kube-proxy.

The kube-proxy is a network component in Kubernetes that runs on each node and manages network rules to allow communication between services and pods. Its primary purpose is to maintain the rules that route traffic to the appropriate pods, implementing the Kubernetes Service abstraction by managing IP and port mapping. Its responsibilities include:

Handling Virtual IPs: Making sure traffic sent to a Service's virtual IP address is routed correctly to the corresponding pod endpoints.

Load Balancing: Distributing traffic across multiple pod instances for a service.

Managing Network Rules: Applying iptables or IPVS rules to route traffic within the cluster based on service specifications.

Question 41: Explain Network Policies in Kubernetes.

Network Policies are used to control network traffic between pods and other network endpoints within a cluster. They define rules specifying how pods are allowed to communicate with each other and with external endpoints, based on factors like namespaces, pod labels, and traffic type (ingress or egress).

The main functions of Network Policies are:

Restricting Access: Limiting communication to only necessary pods, reducing exposure, and enhancing security.

Traffic Direction Control: Enforcing rules for ingress (incoming) and egress (outgoing) traffic to and from specific pods.

Question 42: What is DNS in Kubernetes?

DNS (Domain Name System) is a way to resolve the names of services and pods to their corresponding IP addresses within the cluster. Kubernetes automatically deploys a DNS server, typically CoreDNS, to handle name resolution.

Question 43: How is DNS used?

DNS in Kubernetes is used to:

Facilitate Service Discovery: Services within the cluster are assigned DNS names. Pods communicate with them via service names rather than IP addresses.

Simplify Communication: Pods can access other services using DNS names like service-name.namespace.svc.cluster.local, improving readability and manageability.
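
The naming pattern can be expressed as a pair of small helpers. This is only a sketch: `cluster.local` is the common default cluster domain but can be configured differently, and the function names and the `orders`/`shop` names are made up for illustration.

```python
def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def short_name(service: str, service_namespace: str, caller_namespace: str) -> str:
    """Shortest name a pod can typically use to reach the Service."""
    if caller_namespace == service_namespace:
        return service                      # same namespace: bare name resolves
    return f"{service}.{service_namespace}"  # other namespace: add the namespace


print(service_fqdn("orders", "shop"))
print(short_name("orders", "shop", caller_namespace="shop"))
print(short_name("orders", "shop", caller_namespace="billing"))
```

A pod in the same namespace can usually reach the Service by its bare name, while a pod in another namespace needs at least the `service.namespace` form.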

Question 44: How do ClusterRoles differ from Roles in Kubernetes?

| Aspect | Role | ClusterRole |
| --- | --- | --- |
| Scope | Namespace-scoped | Cluster-scoped |
| Applicable To | Resources within a specific namespace | Resources across all namespaces or cluster-wide resources |
| Use Case | Limits access within one namespace | Grants access to resources cluster-wide or across multiple namespaces |
| Example Resources | Pods, Services, ConfigMaps within a namespace | Nodes, Persistent Volumes, Cluster-wide resources |

Question 45: What is the difference between a Job and a CronJob?

A Job is a one-off task, while a CronJob is a scheduler that creates Jobs at specific intervals.

| Aspect | Job | CronJob |
| --- | --- | --- |
| Purpose | Executes a task once and ensures completion | Executes tasks on a scheduled, recurring basis |
| Scheduling | Runs immediately upon creation | Runs at specified times based on a cron schedule |
| Use Case | One-time tasks, such as data processing or batch jobs | Recurring tasks, like backups, report generation, or maintenance tasks |
| Example | Data migration script that runs once | Daily backup job that runs every 24 hours |

Question 46: How do Secrets differ from ConfigMaps in Kubernetes?

Both Secrets and ConfigMaps are used to store and inject configuration data into pods. However, they serve distinct purposes based on the sensitivity of the information they hold.

| Aspect | Secrets | ConfigMaps |
| --- | --- | --- |
| Purpose | Stores sensitive data, such as passwords or tokens | Stores non-sensitive configuration data |
| Data Encoding | Base64-encoded (an encoding, not encryption) | Plaintext data |
| Use Case | Holding confidential information | Managing environment variables, configuration files, etc. |
| Access Control | Should be tightly restricted with RBAC; encryption at rest can be enabled | Typically less restrictive access |
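
A short example shows what base64 encoding of Secret values looks like and why it should not be mistaken for protection: anyone who can read the encoded value can decode it. The helper names and the sample key and value are illustrative assumptions.

```python
import base64


def encode_secret_data(values: dict) -> dict:
    """Base64-encode each value, as expected in a Secret's data field."""
    return {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }


def decode_secret_data(data: dict) -> dict:
    """Reverse the encoding; no key or password is needed."""
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in data.items()
    }


encoded = encode_secret_data({"username": "admin"})
print(encoded)
print(decode_secret_data(encoded))
```

Because the round trip needs no key, real protection comes from restricting who can read Secrets and from enabling encryption at rest.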

Question 47: Explain Persistent Volumes (PVs) in Kubernetes.

Persistent Volumes (PVs) are storage resources provisioned independently of any specific pod. They let data persist beyond the lifecycle of individual containers. PVs are defined at the cluster level and offer durable storage that can be mounted by pods, so data survives pod restarts and failures.

Key characteristics of PVs:

Lifespan: PVs exist independently of the pods that use them, so data is retained when pods are deleted or rescheduled.

Provisioning: They can be statically created by an administrator or dynamically provisioned based on storage classes.

Usage: Pods can request storage through Persistent Volume Claims (PVCs), which bind to available PVs that meet specified requirements.

Question 48: Describe Persistent Volume Claims (PVCs).

Persistent Volume Claims (PVCs) are requests for storage from Persistent Volumes (PVs). A PVC is a namespaced object that a pod references in its volumes; it specifies the storage requirements, such as size and access modes (e.g., ReadWriteOnce), that the pod needs.

When a PVC is created, Kubernetes searches for a matching PV that meets the claim’s requirements and binds them together.

Key aspects of PVCs:

Storage Request: Defines the amount and type of storage required.

Dynamic Provisioning: PVCs can trigger the automatic provisioning of a PV if a suitable one isn’t available, provided that a StorageClass with a provisioner is configured.

Access Control: Allows pods to securely access persistent storage without direct interaction with the underlying PV.
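The matching step between a claim and the available volumes can be sketched as follows. This is a deliberately simplified model, not the actual Kubernetes controller logic: sizes are plain integers in GiB, and the `Volume`, `Claim`, and `find_match` names are hypothetical helpers introduced for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Volume:
    name: str
    capacity_gib: int
    access_modes: frozenset
    storage_class: str
    bound: bool = False


@dataclass
class Claim:
    name: str
    request_gib: int
    access_modes: frozenset
    storage_class: str


def find_match(claim: Claim, volumes: List[Volume]) -> Optional[Volume]:
    """Return the smallest unbound volume that satisfies the claim, if any."""
    candidates = [
        v for v in volumes
        if not v.bound
        and v.storage_class == claim.storage_class
        and v.capacity_gib >= claim.request_gib
        and claim.access_modes <= v.access_modes  # all requested modes offered
    ]
    return min(candidates, key=lambda v: v.capacity_gib, default=None)


volumes = [
    Volume("pv-small", 5, frozenset({"ReadWriteOnce"}), "standard"),
    Volume("pv-large", 20, frozenset({"ReadWriteOnce", "ReadOnlyMany"}), "standard"),
    Volume("pv-fast", 10, frozenset({"ReadWriteOnce"}), "fast"),
]

claim = Claim("app-data", 8, frozenset({"ReadWriteOnce"}), "standard")
match = find_match(claim, volumes)
print(match.name if match else "no match: claim stays Pending")
```

Here `pv-small` is too small and `pv-fast` belongs to a different class, so the claim matches `pv-large`. When nothing matches and no dynamic provisioning is available, the claim remains pending.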

Question 49: How is storage provisioned dynamically in Kubernetes?

Storage is dynamically provisioned using Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) with the help of Storage Classes.

Storage Class: A StorageClass defines the type of storage and the parameters for dynamic provisioning. It names the provisioner (for example, a driver for AWS EBS, GCE Persistent Disk, or NFS) and can carry provider-specific settings such as replication or encryption.

Provisioning: When a PersistentVolumeClaim (PVC) is created by an application to request storage, Kubernetes uses the associated StorageClass to automatically create a PersistentVolume (PV) that satisfies the claim's requirements. This process is managed by a storage provisioner (either cloud-specific or custom), which handles the backend infrastructure to create the volume on demand.

Binding: After provisioning, the PersistentVolume is bound to the PersistentVolumeClaim. This makes the storage ready for use by the pod that references the claim.
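The link between a StorageClass and a claim can be illustrated with the two manifests involved, sketched here as Python dictionaries. The names `fast-ssd` and `app-data`, the 10Gi size, and the choice of the AWS EBS CSI driver with a `gp3` volume type are assumptions made for the example; a real cluster would use whatever provisioner its administrator has installed.

```python
import json

# StorageClass: names the provisioner and its provider-specific parameters.
storage_class = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {"name": "fast-ssd"},
    "provisioner": "ebs.csi.aws.com",  # assumed driver for this example
    "parameters": {"type": "gp3"},     # provider-specific setting
    "reclaimPolicy": "Delete",
    "volumeBindingMode": "WaitForFirstConsumer",
}

# PVC: requests storage from that class; no PV is created by hand.
claim = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "app-data"},
    "spec": {
        "storageClassName": storage_class["metadata"]["name"],
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": "10Gi"}},
    },
}

if __name__ == "__main__":
    print(json.dumps(storage_class, indent=2))
    print(json.dumps(claim, indent=2))
```

The claim refers to the class only by name through `storageClassName`. The provisioner named in the class is what creates the backing volume and the matching PV.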

Question 50: What are StorageClasses?

A StorageClass is an abstraction that describes a "class" of storage (e.g., SSD, HDD, cloud storage) that can be dynamically provisioned. Administrators use it to specify the characteristics and requirements of the storage, which makes it possible to offer varied storage options to applications without pre-provisioning volumes.

Question 51: Explain the purpose of ConfigMaps.

A ConfigMap is an API object used to store non-sensitive configuration data in key-value pairs. Its primary purpose is to externalize configuration information so that applications are decoupled from configuration details.

Purpose of ConfigMaps:

Separation of Configuration and Code: ConfigMaps separate configuration from application code. By using ConfigMaps, you avoid hardcoding configuration values into container images, so configurations can be managed and updated independently of application deployments.

Flexibility and Reusability: ConfigMaps can be easily modified and reused across different environments (e.g., development, testing, production). Teams can adapt configurations to different deployment environments without changing application code.

Dynamic Configuration Management: Applications can reference ConfigMaps for configuration data at runtime. When a ConfigMap is mounted as a volume, updates are eventually propagated to the mounted files without restarting the pod (mounts that use subPath are an exception); values injected as environment variables are only picked up after the pod restarts. Whether a running application notices the change still depends on whether it re-reads its configuration.

Ease of Use in Environment Variables and Volume Mounts: ConfigMap data can be injected into pods as environment variables or as files in a volume.
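Both injection methods mentioned above can be shown in a single pod. The following is a minimal sketch as Python dictionaries; the names `app-config` and `config-demo`, the keys, the image, and the `/etc/app` path are placeholder assumptions.

```python
import json

config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "app-config"},
    "data": {
        "LOG_LEVEL": "info",
        "app.properties": "greeting=hello\n",
    },
}

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "config-demo"},
    "spec": {
        "containers": [
            {
                "name": "app",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "cat /etc/app/app.properties; sleep 3600"],
                # Method 1: one key injected as an environment variable.
                "env": [
                    {
                        "name": "LOG_LEVEL",
                        "valueFrom": {
                            "configMapKeyRef": {"name": "app-config", "key": "LOG_LEVEL"}
                        },
                    }
                ],
                # Method 2: every key exposed as a file under /etc/app.
                "volumeMounts": [
                    {"name": "config", "mountPath": "/etc/app", "readOnly": True}
                ],
            }
        ],
        "volumes": [{"name": "config", "configMap": {"name": "app-config"}}],
    },
}

if __name__ == "__main__":
    print(json.dumps(config_map, indent=2))
    print(json.dumps(pod, indent=2))
```

With the volume mount, each key in the ConfigMap becomes a file name under the mount path, so `app.properties` would appear as `/etc/app/app.properties`.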

Question 52: What is VolumeMount?

A volumeMount is an entry in a container's specification that defines how a volume is mounted into the container's file system. It names a volume declared in the pod's volumes list and specifies the path inside the container where that volume should be attached, allowing the container to read and, unless the mount is marked read-only, write data on the volume.
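A volumeMount refers to its volume by name, and a mismatch between the two is a frequent source of rejected pod specs. The sketch below shows that linkage with a small check; `unresolved_mounts` is a hypothetical helper written for this example, not part of any Kubernetes client library.

```python
def unresolved_mounts(pod_spec):
    """Return (container, mount) name pairs whose volume is not declared."""
    declared = {v["name"] for v in pod_spec.get("volumes", [])}
    missing = []
    for container in pod_spec.get("containers", []):
        for mount in container.get("volumeMounts", []):
            if mount["name"] not in declared:
                missing.append((container["name"], mount["name"]))
    return missing


# Placeholder pod spec: one declared volume, two mounts.
pod_spec = {
    "containers": [
        {
            "name": "app",
            "image": "busybox:1.36",
            "volumeMounts": [
                {"name": "scratch", "mountPath": "/tmp/work"},
                {"name": "logs", "mountPath": "/var/log/app"},  # no such volume
            ],
        }
    ],
    "volumes": [{"name": "scratch", "emptyDir": {}}],
}

print(unresolved_mounts(pod_spec))
```

In this example the `scratch` mount resolves to the declared `emptyDir` volume, while the `logs` mount is reported because no volume with that name exists in the pod.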

Conclusion

Kubernetes is a dynamic platform that continuously evolves to meet the demands of cloud-native development. Keeping up with its latest features and enhancements will not only help during interviews but also equip professionals to manage real-world clusters.

We have listed 52 Kubernetes interview questions with detailed answers in this article. We hope it helps you prepare to demonstrate both theoretical knowledge and practical insight.

To keep up with the rapid technological changes, you can sign up for Skilltrans courses. You should know that our courses also cover a wide range of other topics.

Meet Hoang Duyen, an experienced SEO Specialist with a proven track record in driving organic growth and boosting online visibility. She has honed her skills in keyword research, on-page optimization, and technical SEO. Her expertise lies in crafting data-driven strategies that not only improve search engine rankings but also deliver tangible results for businesses.